Recently we had the pleasure of speaking to Dr Andre Oboler, an IEEE member and an expert in social media and online public diplomacy. Dr Oboler is CEO of the Online Hate Prevention Institute and a consultant on various online projects. He received his PhD in computer science from Lancaster University, UK, and his LLM(JD) from Monash University, Australia. Dr Oboler has also been a Post-Doctoral Fellow in Political Science at Bar-Ilan University, Israel.

Readers can listen to the interview podcast by clicking on the media player below, or read a text version of the interview.

Audio Player


Andre, tell us a little about your work with the IEEE. How did you get involved, and what opportunities have you been able to capitalise on as a result?

Like many, I first became involved as a PhD student, on my way to my first IEEE conference. After my studies overseas, I returned to Australia and connected with the Victorian Section. The committee put me in touch with the chair of the Computing Chapter, Dr Ron Van Schyndel, whom I assisted for a year before becoming chapter chair myself. After two years in the role, I was approached to serve the Computer Society in a coordinating role for Australia and New Zealand, and later as the Society’s Region 10 Coordinator. During this time I also became involved in student awards. While serving on the Victorian Section Committee, I helped judge our local student paper prizes, an experience that led to my being recruited to, and then asked to lead, the Computer Society’s global student awards. I am now serving my second year as Vice Chair (Awards and Recognition) of the Computer Society’s Membership and Geographic Activities Board, a role in which I manage those who now run awards such as the Merwin Scholarship and the Computer Society’s Distinguished Visitor Program.

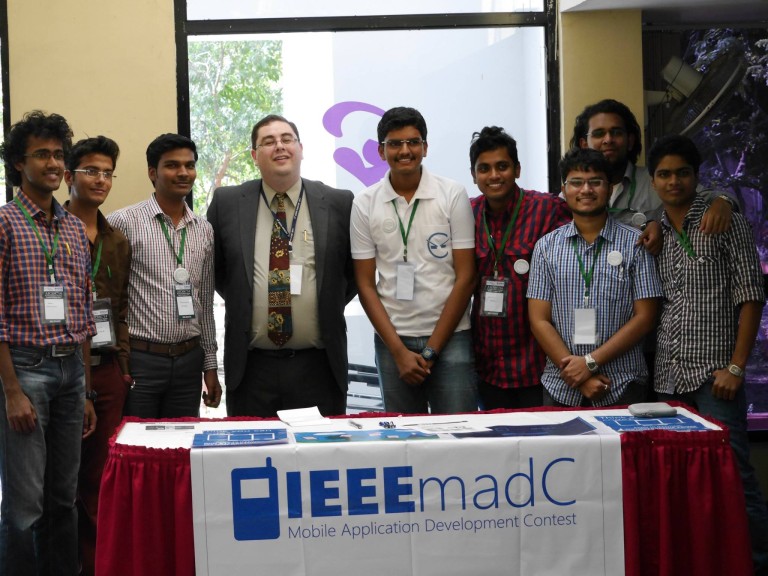

During the last few years I have helped to organise two IEEE conferences, serving as General Chair for one of them. I’ve also served as a Distinguished Visitor for the Computer Society, speaking around the world. On one trip I travelled across India, where I delivered 20 talks in 10 days to a total of over 1,500 people, most of them IEEE members. On another trip I attended a Computer Society student congress for a local section and had the pleasure of presenting an award to an amazing educator and Branch Councillor. I’ve also mentored Student Ambassadors for the Computer Society, some of whom have gone on to do amazing things.

You are very passionate about online hate prevention. Tell us about the institute. What made you decide to tackle this major issue?

The Online Hate Prevention Institute (OHPI) is Australia’s only charity dedicated to the problem of online hate. In January 2016, OHPI turns four. I’ve been working in this area for 10 years, at first exclusively on antisemitism, and then more broadly on all forms of online hate. The problems we face with the spread of online racism, misogyny, religious vilification, homophobia and cyberbullying are, to a large degree, engineering problems.

As a society we know how to identify such content when we see it, but we don’t know how to respond to it in social media, or even whether we should. The problem is one of scale, of cross-jurisdictional issues, and of ethical questions where online safety and freedom of speech conflict. There is a need for more computer scientists and engineers to get involved in tackling these issues, bringing technical expertise and professional ethics to the problem.

You have been praised by diverse communities for your tireless work. You have covered topics such as sexist and racial abuse against women, the fine balance between the right to free expression and our equally important right to human dignity, and anti-Muslim and anti-Jewish online hate. What are some of the current hot topics in online hate prevention?

Right now there are serious concerns over incitement to violence on Facebook. This has led to Facebook appointing a local content review team in Germany, to head off criminal charges that were being considered against its management. In New York, an Israeli NGO is suing Facebook over a lack of moderation of social media posts calling for stabbing attacks, which have been occurring in the streets in recent months. Other hot topics include online misogyny, where posts promoting violence against women are causing a major backlash; anti-Muslim hate, which has been growing in Australia in particular; and antisemitic hate, including Holocaust denial and conspiracy theories.

To date, what has been achieved with the institute? Can you provide some measurable results, statistics or evidence of impact?

We have produced the world’s first tool, fightagainsthate.com, for bringing transparency to the way social media companies respond to users’ reports. The tool is supported by 38 partner organisations, including the Australian IEEE SSIT Chapter, and has been praised by UNESCO and presented at the United Nations in New York. We have also used the tool to produce two major reports, one looking at over 2,000 items of antisemitism and the other at over 1,000 items of anti-Muslim hate. The tool itself collected over 5,400 reports of online hate during 2015.

In both reports we provide a breakdown of the hate into categories. For example, we can see that the most common form of antisemitism is classic antisemitism, such as conspiracy theories, while the most common expression of anti-Muslim hate is the claim that Muslims are a threat to our way of life. We also examine how the social media companies have responded to users’ reports of this hate. The answer, in short, is: not nearly well enough. The antisemitism report will be released in January 2016 and the anti-Muslim hate report in March 2016.

We’re also very proud of our online community, which has grown to over 21,000 supporters at facebook.com/onlinehate. Our supporters have helped to remove many channels and items of online hate. This normally happens when we put out a briefing that points to an online account dedicated to the promotion of hate, provides examples of the hate it posts, and asks our supporters to take a minute to report it. In the 2014-2015 financial year we produced 45 briefings, which were shared on social media a total of 29,000 times.

What difficulties do you face day to day, and what have you faced in the past?

One of the main difficulties we face is funding. Finding money to keep going is a constant challenge; we are a foundation supported by charities. In Australia, donations to us are tax deductible, but in other countries they are not. Nevertheless, people donate because they believe in the cause and in our work. We also face threats and attacks, and there have been frequent attempts to hack our web pages, with hackers running scripts to try to access a database. From a technical point of view, we find it quite amusing when we put up hand-coded static web pages and hackers try running scripts to get to the database. There’s no database there. We have to build our systems to be pretty robust. There is also a level of emotional pressure that comes from dealing with this sort of content. We go through examples such as women being threatened with violence and rape. We are dealing with this content all the time: people being threatened with stabbing and shooting. At the lower end, it is people being excluded from society. This does take an emotional toll on us.

So how is action taken when you report cases? Are they reported to the police, and where do they go from there?

We do get reports via email, Facebook messages and so on, but the main way we get information, other than what we find ourselves, is through the fightagainsthate.com tool. People log in and paste the URL of the offending content, and this goes into a large database. The system is cloud-based and built to scale. We extract items from the database and use them for our briefings and reports, and we also use them for confidential reports that don’t go up on our website. The confidential reports normally go directly to government, often to law enforcement agencies. Unlike our public reports, where we intentionally avoid identifying individuals, the reports for government include all of this information. We often then look into where the offenders are and what jurisdiction they are in, and then provide this to the correct law enforcement agency. However, we find that law enforcement may want to take action but simply cannot do so due to a lack of resources. In particular, they lack technical staff to combat these crimes. Again, these are job opportunities for young professionals; agencies around the world are recruiting from a limited pool of people. What we can do is produce our reports in a way that reduces the technical burden on law enforcement agencies. We also deal with counter-terrorism and extremism. This content goes from us to the police.

IEEE is a multiculturally diverse organisation. In your opinion, what can IEEE Young Professionals do to prevent online hate?

The first step is being aware of it as an issue and considering it in light of the IEEE Code of Ethics. We each have a professional responsibility to “improve the understanding of technology; its appropriate application, and potential consequences”. Spreading awareness of the problem of online hate to co-workers, friends and family, and encouraging everyone to take proactive steps to minimise that harm, would be a great start. Proactive steps can include reporting hate speech to the platform provider and to FightAgainstHate.com, but they can also include better moderation on pages people manage, and encouraging companies to have policies against the misuse of social media. I’d of course also welcome people to join us on Facebook and get involved in our campaigns.


Interview condu